The Nyquist Theorem

The sampling rate determines the highest frequency you can
represent with a digital signal. The Nyquist Theorem (named
after the Swedish-born researcher Harry Nyquist) states that
the sampling rate must be at least twice as high as
the highest frequency you want to represent.

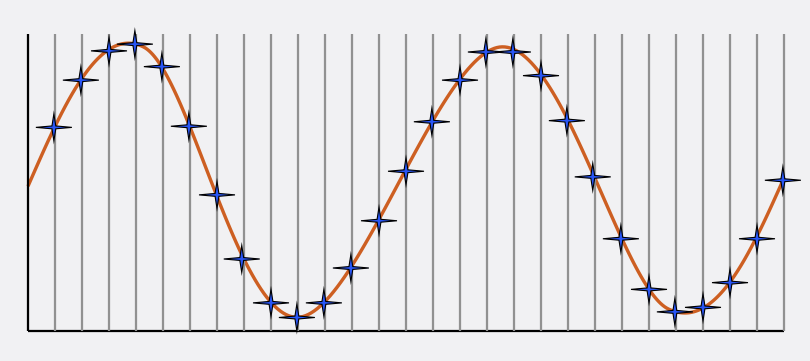

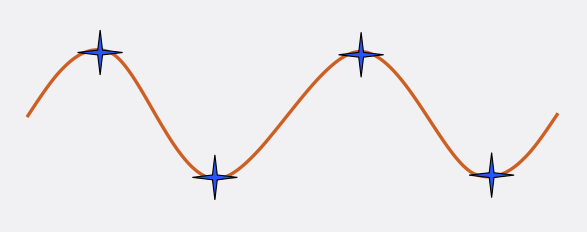

One way to think about this is to imagine a high-frequency sine
wave for which we sample exactly two points per cycle: the crest
(top) of the waveform, and the trough (bottom) of the waveform.
The illustration shows two cycles of a sine wave, with each cycle
represented by two sample points (the blue stars).

The sample points shown are sufficient to allow us to reconstruct
the original analog audio signal. Since there are two sample points
for each cycle, the sampling rate (or sampling frequency) is
twice the frequency of the sine wave. (So if the sine wave is
10,000 Hz, the sampling rate is 20,000 Hz.) That meets the
requirement of the Nyquist Theorem that the sampling rate be at
least twice as high as the highest frequency in the signal.
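The arithmetic above can be checked with a short Python sketch. The helper name `sample_sine` and the phase offset of a quarter cycle are assumptions made for illustration; the offset is chosen so that the two samples per cycle land on the crest and the trough, as in the illustration.

```python
import math

def sample_sine(freq_hz, sample_rate_hz, num_samples, phase=math.pi / 2):
    """Sample sin(2*pi*f*t + phase) at t = n / sample_rate_hz.

    The default phase of pi/2 is an assumption chosen so that, at two
    samples per cycle, the samples fall on crests and troughs.
    """
    return [
        math.sin(2 * math.pi * freq_hz * n / sample_rate_hz + phase)
        for n in range(num_samples)
    ]

sine_hz = 10_000          # frequency of the sine wave
rate_hz = 2 * sine_hz     # two samples per cycle -> 20,000 Hz

samples = sample_sine(sine_hz, rate_hz, 4)   # four samples = two cycles
rounded = [round(s) for s in samples]        # remove floating-point noise
print(rate_hz)   # 20000
print(rounded)   # [1, -1, 1, -1]
```

The four samples alternate between the crest (+1) and the trough (-1), which is the two-cycles, four-stars picture described in the text.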

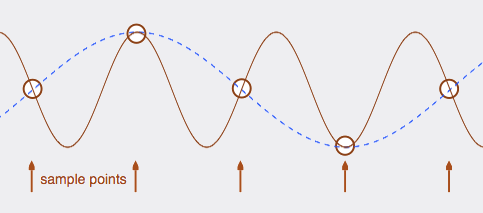

The frequency shown above is critically sampled, meaning
that with any fewer sample points per cycle, you would not be
able to represent the analog signal accurately. This frequency,
which is exactly half of the sampling rate, is called the
Nyquist frequency.
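As a small worked example, the relationship between the sampling rate and the Nyquist frequency can be written as two one-line functions. The function names are hypothetical, and the 44,100 Hz figure (the sampling rate used for audio CDs) is included only as a familiar reference point.

```python
def nyquist_frequency(sample_rate_hz):
    """Highest representable frequency: half of the sampling rate."""
    return sample_rate_hz / 2

def min_sample_rate(highest_freq_hz):
    """Lowest sampling rate that satisfies the theorem as stated above."""
    return 2 * highest_freq_hz

print(nyquist_frequency(20_000))  # 10000.0
print(min_sample_rate(10_000))    # 20000
print(nyquist_frequency(44_100))  # 22050.0
```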

(You might be skeptical about the claim, made above, that two
sample points per cycle allow you to reconstruct the sine wave.
Wouldn’t a reconstruction create straight lines between the
points? In fact, the process of converting a digital signal back to
analog form includes an analog “smoothing” filter that
causes the output to closely approximate the original curved
shape.)
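To see why smoothing gives a curve rather than straight lines, here is a rough numerical sketch. It is not how a real converter works: it stands in for the analog filter with ideal sinc interpolation (the Whittaker-Shannon formula), which is an assumption made for illustration, and the function names are hypothetical. It compares the smoothed value and the straight-line value at a point a quarter of the way between a trough and the next crest.

```python
import math

def sinc(x):
    """Normalized sinc: sin(pi*x) / (pi*x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)

def reconstruct(samples, position):
    """Idealized smoothing: sum of one sinc pulse per sample.

    `position` is measured in sample periods (1.0 = one sample apart).
    """
    return sum(s * sinc(position - n) for n, s in enumerate(samples))

# Crest, trough, crest, trough, ... (two samples per cycle).
samples = [(-1) ** n for n in range(100)]

# A quarter of the way from sample 49 (a trough) to sample 50 (a crest).
position = 49.25

smooth = reconstruct(samples, position)
straight = samples[49] + 0.25 * (samples[50] - samples[49])
ideal = math.cos(math.pi * position)   # the original curve at that point

print(f"straight line: {straight:.3f}")
print(f"smoothed:      {smooth:.3f}")
print(f"original sine: {ideal:.3f}")
```

With these assumptions the straight-line value is -0.5, while the smoothed value lands close to the original curve (about -0.707). The small remaining difference comes from using only 100 samples rather than an endless stream.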